Network Interview Experience: It’s time to learn about these new features of HTTP 2.0


There are not many interview questions about HTTP 2.0 yet, but many applications are already built on it. Google's gRPC framework, for example, uses HTTP 2.0 to improve efficiency. HTTP 2.0 also fixes many shortcomings of HTTP 1.x. If you want to stand out in an interview and better understand how frameworks like gRPC work in practice, it is well worth understanding HTTP 2.0. Moreover, HTTP 2.0 is gradually replacing HTTP 1.x in many scenarios.

Problems with HTTP 1.x

Once you understand how HTTP 1.x is implemented, you will find that it has several problems.

Problem 1: TCP connection limit. To avoid network congestion and excessive CPU and memory usage, each browser limits the number of TCP connections it opens per domain.

Problem 2: Head-of-line blocking. Each TCP connection can only process one HTTP request at a time. The browser follows the FIFO principle: if the previous request has not returned, subsequent requests are blocked. Pipelining was proposed as a solution, but it has many problems of its own. A slow first response still blocks the responses behind it; the server has to buffer multiple responses so that it can return them in order, which takes up more resources; the browser may have to resend several requests if the connection drops and it retries; and the client, every proxy, and the server must all support pipelining.

Problem 3: Headers are large and have to be resent in full with every request, and there is no mechanism for compressing them.

Problem 4: Reducing the number of requests requires optimization work such as file merging, which in turn increases the latency of each individual request.

Problem 5: Plain-text transmission is not secure.

The emergence of HTTP 2.0

In response to these problems, RFC 7540 defines the HTTP 2.0 protocol in detail.
HTTP 2.0 is the next-generation Internet communication protocol. It is based on SPDY (An experimental protocol for a faster web, The Chromium Projects) and adopts several of its ideas. HTTP 2.0 provides optimizations such as binary framing, header compression, multiplexing, request priority, and server push. Its goals are to reduce latency by multiplexing requests and responses, to reduce protocol overhead by compressing HTTP header fields, and to add support for request priority and server push.

What is the SPDY protocol

SPDY is an application-layer protocol developed by Google on top of TCP. Its goal is to optimize the performance of HTTP, shortening page load times and improving security through techniques such as compression, multiplexing, and prioritization. The core idea of the protocol is to minimize the number of TCP connections. SPDY is not a replacement for HTTP but an enhancement of it.

The Internet Engineering Task Force (IETF) standardized the ideas behind Google's SPDY and, in May 2015, published the HTTP 2.0 standard (HTTP/2 for short), which closely resembles SPDY. Google then announced that it would drop support for SPDY in favor of HTTP/2.

Let's take a closer look at the new features provided by HTTP 2.0.

Binary Framing Layer

How does HTTP 2.0 break through the performance limits of HTTP 1.1 and achieve low latency and high throughput without changing the semantics of HTTP 1.x? One of the keys is a binary framing layer added between the application layer (HTTP) and the transport layer (TCP). The binary framing layer is the core of the HTTP 2.0 performance improvements. HTTP 1.1 communicates in plain text at the application layer, while HTTP 2.0 splits everything it transmits into smaller messages and frames and encodes them in binary.
As a result, both the client and the server need new binary encoding and decoding mechanisms. As shown in the figure below, HTTP 2.0 does not change the semantics of HTTP 1.x; it only changes how messages are framed for transmission.

[Figure: HTTP/2 Protocol]

Binary framing involves three related concepts: frames, messages, and streams.

Frame: The smallest unit of HTTP 2.0 communication. Every frame starts with a 9-byte header that contains a 24-bit payload length, an 8-bit type, 8 bits of flags, one reserved bit, and a 31-bit identifier of the stream to which the frame belongs. The frame payload carries a specific type of data, such as HTTP headers or message body data.
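The frame header can be illustrated with a short Python sketch. It assumes the layout given in RFC 7540, section 4.1 (a 9-octet header: 24-bit length, 8-bit type, 8-bit flags, one reserved bit, and a 31-bit stream identifier). The names `FrameHeader`, `pack_frame_header`, and `parse_frame_header` are hypothetical helpers written for this example, not part of any library.

```python
import struct
from typing import NamedTuple

FRAME_HEADER_LEN = 9  # octets, per RFC 7540 section 4.1


class FrameHeader(NamedTuple):
    length: int     # payload length in bytes (24 bits)
    type: int       # frame type code, e.g. 0x0 DATA, 0x1 HEADERS
    flags: int      # type-specific flags (8 bits)
    stream_id: int  # stream the frame belongs to (31 bits)


def pack_frame_header(length: int, frame_type: int, flags: int, stream_id: int) -> bytes:
    """Serialize the four header fields into 9 bytes (hypothetical helper)."""
    if not 0 <= length < 2**24:
        raise ValueError("length must fit in 24 bits")
    if not 0 <= stream_id < 2**31:
        raise ValueError("stream_id must fit in 31 bits")
    # 3-byte big-endian length, then type (1), flags (1), stream id (4).
    return length.to_bytes(3, "big") + struct.pack(">BBI", frame_type, flags, stream_id)


def parse_frame_header(data: bytes) -> FrameHeader:
    """Decode the first 9 bytes of a frame (hypothetical helper)."""
    if len(data) < FRAME_HEADER_LEN:
        raise ValueError("need at least 9 bytes for a frame header")
    length = int.from_bytes(data[0:3], "big")
    frame_type, flags, raw_id = struct.unpack(">BBI", data[3:9])
    # The most significant bit is reserved and must be ignored on receipt.
    return FrameHeader(length, frame_type, flags, raw_id & 0x7FFFFFFF)
```

For instance, `parse_frame_header(pack_frame_header(16, 0x1, 0x4, 1))` describes a HEADERS frame with a 16-byte payload on stream 1.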

With frames, binary transmission replaces the original plain-text transmission, and each message is split into smaller frames:

[Figure: HTTP/2 Protocol]

The header information of an HTTP 1.1 message is carried in a HEADERS frame, and the request body is carried in DATA frames.

Message: A communication unit larger than a frame. It is a logical HTTP message (a request or a response) and consists of one or more frames. For example, a HEADERS frame followed by a series of DATA frames makes up a request message.

Stream: A communication unit larger than a message. It is a virtual channel within a TCP connection that can carry messages in both directions. Each stream has a unique integer identifier. To prevent the two ends from choosing the same stream ID, streams initiated by the client have odd IDs and streams initiated by the server have even IDs.

All HTTP 2.0 communication takes place over a single TCP connection, which can carry many bidirectional streams at once. Each stream carries messages, and each message consists of one or more frames. Frames from different streams can be interleaved on the connection and are reassembled according to the stream identifier in each frame header.

[Figure: HTTP/2 Protocol]

The binary framing layer preserves the semantics of HTTP, including headers, methods, and so on. From the application's point of view, nothing differs from HTTP 1.x. At the same time, all communication with the same host can be completed over a single TCP connection.
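The reassembly step described above can be sketched in a few lines of Python. This is a simplified model, not a real HTTP/2 implementation: each frame is represented as a hypothetical `(stream_id, payload, end_stream)` tuple, and the helpers `initiated_by_client` and `reassemble` are names invented for this example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Simplified frame model (an assumption for this sketch):
# (stream identifier, payload bytes, True if this frame ends the stream)
Frame = Tuple[int, bytes, bool]


def initiated_by_client(stream_id: int) -> bool:
    """Client-initiated streams use odd identifiers; server-initiated use even."""
    if stream_id <= 0:
        raise ValueError("stream 0 is reserved for connection-level frames")
    return stream_id % 2 == 1


def reassemble(frames: Iterable[Frame]) -> Dict[int, bytes]:
    """Group interleaved frames by stream ID and join each finished stream."""
    buffers: Dict[int, List[bytes]] = defaultdict(list)
    complete: Dict[int, bytes] = {}
    for stream_id, payload, end_stream in frames:
        # Frames of one stream arrive in order, so appending is enough.
        buffers[stream_id].append(payload)
        if end_stream:
            complete[stream_id] = b"".join(buffers.pop(stream_id))
    return complete


# Two requests interleaved on one connection.
interleaved = [
    (1, b"first half, ", False),
    (3, b"whole message", True),
    (1, b"second half", True),
]
messages = reassemble(interleaved)
```

Here stream 3 finishes before stream 1 even though stream 1 started first, which is the behavior that a single HTTP 1.x connection cannot provide.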

Multiplexing

Multiplexing allows multiple request and response messages to be in flight at the same time over one TCP connection. A message consists of frames, and each frame is tagged with a stream ID. The receiver collects the frames of each stream by stream ID and splices them back into a complete message. This is HTTP/2 multiplexing.

Streams let multiple requests and responses run in parallel on a single connection. This removes head-of-line blocking at the HTTP level (a lost TCP packet can still stall every stream on the connection), reduces the number of TCP connections, and avoids paying the cost of TCP slow start on each new connection. As a result, HTTP/2 needs only one connection per domain name, instead of the 6 to 8 connections that browsers open with HTTP/1. Note that frames from different streams can be interleaved, but frames within the same stream must be sent in order.

Server Push

With server push, the client sends one request and the server, based on that request, returns additional responses in advance so that the client does not need to request them later. In other words, in HTTP/2 the server can send multiple responses to a single client request, pushing resources the client has not explicitly asked for. As shown in the figure below, the client requests Stream 1 (/page.html), and the server pushes Stream 2 (/script.js) and Stream 4 (/style.css) while returning the Stream 1 message:

[Figure: HTTP/2 Protocol]

If a request is for the home page, the server may respond with the home page content, the logo, and the style sheet, because it knows the client will need them. This saves request round trips, speeds up the page response, and improves the user experience.

Server push is mainly an alternative to resource inlining. Unlike a resource inlined into an HTTP/1.1 response, a pushed resource is a separate response, so the client can cache it, reuse it across pages, or decline it.

Limitations of push: All pushed resources must comply with the same-origin policy. In other words, the server cannot push arbitrary third-party resources to the client. It can only push resources for origins it is authoritative for, and the client can refuse pushes.

Header Compression (HPACK)

HTTP/1.1 does not support header compression, which is one of the gaps SPDY and HTTP/2 set out to close. SPDY compresses headers with a general-purpose algorithm (DEFLATE), while HTTP/2 uses HPACK, an algorithm designed specifically for header compression.

[Figure: HTTP/2 Protocol]

HTTP is stateless, so every request must carry all of its metadata (the fields describing the resource and the client), and this repeated data can amount to hundreds or even thousands of bytes. Many request header fields, such as Cookie, are identical from one request to the next. Attaching the same content to every request wastes bandwidth and slows things down, when in principle each header only needs to be sent once.

HTTP/2 addresses this with a header compression mechanism. On the one hand, header names and values can be encoded with a static Huffman code before being sent. On the other hand, the client and the server both maintain header tables: a predefined static table plus a dynamic table built up over the lifetime of the connection. Each field stored in a table has an index number, so a field that has been sent before does not need to be sent again; only its index is sent.

Request Priority

Once HTTP messages are split into many independent frames, performance can be improved further by optimizing how those frames are interleaved and in what order they are transmitted. In HTTP/2, each stream can declare dependencies and a weight, and resources are allocated according to the resulting dependency tree, so that key requests are not held up behind less important ones.

Application-layer connection reset

In HTTP/1, the only way to abort a request in progress is to set the RST flag in a TCP segment to tell the other end to close the connection. This tears down the connection, so the next request has to establish a new one.
HTTP/2 introduces the RST_STREAM frame, which cancels the stream of a single request without closing the connection, so it performs better.

Flow Control

TCP controls flow with a sliding window algorithm: the sender has a send window and the receiver has a receive window. HTTP/2 flow control resembles the TCP receive window. The receiver of data tells the other end its flow-control window size in bytes, which is the amount of data it is prepared to receive. Only DATA frames are subject to flow control. In this way, each end can limit how much data the other end sends. For each stream, both ends must advertise how much room they have to process new data, and the other end may send only that much until the window is enlarged.

Summary

Every new protocol takes time to become widespread, and HTTP/2 is no exception. Fortunately, it is an upper-layer protocol that only adds a framing layer between HTTP semantics and TCP, so its gradual adoption is all but inevitable. Having seen these new features, you can understand why more and more browsers and middleware are adopting HTTP/2: it is genuinely efficient and worth learning and using.
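As a supplement to the flow control section above, the window bookkeeping on the sending side can be sketched in Python. This is a minimal model under stated assumptions: it tracks a single stream only (real HTTP/2 also keeps a connection-level window), `SendWindow` and its methods are hypothetical names, and the default of 65,535 bytes is the initial window size defined in RFC 7540.

```python
class SendWindow:
    """Tracks how many DATA bytes a sender may still send on one stream."""

    MAX_WINDOW = 2**31 - 1  # largest window size allowed by RFC 7540

    def __init__(self, initial_size: int = 65_535) -> None:
        self.available = initial_size

    def consume(self, wanted: int) -> int:
        """Return how many bytes may be sent now, shrinking the window."""
        allowed = max(0, min(wanted, self.available))
        self.available -= allowed
        return allowed

    def window_update(self, increment: int) -> None:
        """Apply a WINDOW_UPDATE from the receiver, enlarging the window."""
        if increment <= 0:
            raise ValueError("increment must be positive")
        if self.available + increment > self.MAX_WINDOW:
            raise ValueError("window would exceed the maximum size")
        self.available += increment


# The receiver advertised room for 10 bytes on this stream.
window = SendWindow(initial_size=10)
first = window.consume(6)    # 6 bytes may be sent
second = window.consume(6)   # only 4 bytes of room remain
blocked = window.consume(1)  # window exhausted, nothing may be sent
window.window_update(5)      # receiver frees up 5 more bytes
resumed = window.consume(8)  # sender may continue with 5 bytes
```

The sender stalls once the window reaches zero and resumes only after the receiver enlarges it, which is how the receiver limits the rate of incoming DATA frames.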

<<: How many people are using invalid 5G? The price has doubled, and the experience has become worse

>>: In addition to 404, what other "codes" are there for web pages?

Recommend

What is the difference between a cell and a sector? What about carrier frequency and carrier wave?

Cell, sector, carrier and carrier frequency are a...

Kubesphere deploys Kubernetes using external IP

Our company has always had the need to connect al...

What secrets do you not know about the spanning tree protocol?

1. Spanning Tree Protocol (STP) Compaq was long a...

Kunpeng spreads its wings and walks with youth | Kunpeng University Tour is about to enter Shanghai Jiao Tong University, exciting "spoilers" in advance!

At 1:30 pm on December 4, the Kunpeng University ...

Want to handle tens of millions of traffic? You should do this!

Your boss asks you to handle tens of millions of ...

Let’s talk about deterministic networks

Low latency in the network is particularly import...

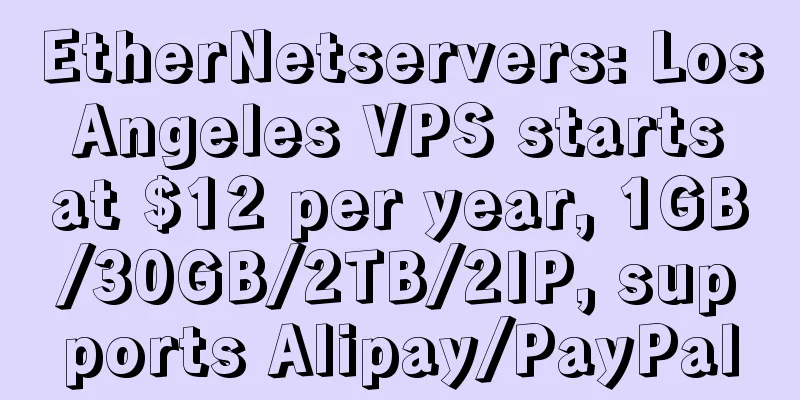

UCloud Summer Big Offer: Kuaijie Cloud Server starts from 47 yuan/year, price guaranteed for Double 11

UCloud has recently launched a global cloud servi...

Enterprises need to prioritize mobile unified communications

The need for secure, reliable, and easy-to-use co...

Discussion | Technical advantages of the top 9 leading SD-WAN providers abroad

SD-WAN technology helps make wide area networks m...

Inventory: Excellent NaaS providers in 2021

NaaS, short for Network as a Service, is a servic...

The first step in learning networking: a comprehensive analysis of the OSI and TCP/IP models

Hello, everyone! I am your good friend Xiaomi. To...

RAKsmart popular servers start at $30/month, 1Gbps unlimited traffic servers start at $99/month, US/Hong Kong/Korea/Japan data centers

RAKsmart has started its promotion this month. It...

Three-minute review! A quick overview of 5G industry development trends in December 2021

After the rapid development in 2020, 2021 is a cr...

Yecaoyun Hong Kong High Defense VPS, starting from 122 yuan/month-dual core/2GB/15G SSD/5M/50G defense

Yecaoyun recently launched a new high-defense VPS...

Friendhosting Summer Promotion: All VPSs are as low as 55% off, and unlimited traffic for 10 data centers for half a year starts from 8 euros

Friendhosting sent a promotional email yesterday ...

![[11.11] BGP.TO Japan Softbank/Singapore CN2 server 35% off monthly payment starting from $81](/upload/images/67cac25445835.webp)