What is edge computing in IoT?


The growing number of connected devices is generating an enormous amount of data, and the volume will keep rising as Internet of Things (IoT) technologies and use cases expand in the coming years. According to research firm Gartner, by 2020 there will be as many as 20 billion connected devices generating billions of bytes of data per user. These devices are not just smartphones and laptops, but also connected cars, vending machines, smart wearables, surgical robots, and more.

The large volume of data generated by these many types of devices has to be pushed to a centralized cloud for retention (data management), analysis, and decision-making. The results of the analysis are then transmitted back to the device. This round trip consumes considerable network and cloud infrastructure resources and adds to latency and bandwidth consumption, which affects mission-critical IoT use cases. For example, a self-driving connected car generates a large amount of data every hour; the data must be uploaded to the cloud and analyzed, and instructions must be sent back to the car. High latency or resource congestion may delay the response to the car, which in serious cases may cause a traffic accident.

IoT Edge Computing

This is where edge computing comes in. An edge computing architecture can be used to optimize cloud computing systems so that data processing and analysis are performed at the edge of the network, closer to the data source. With this approach, data can be collected and processed near the device itself rather than being sent to the cloud or a data center.

Benefits of edge computing include shorter response times and lower bandwidth consumption, because less data has to make the round trip to the cloud.
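To make the idea of processing data near the device more concrete, here is a minimal Python sketch. It is an illustration only, not taken from the original article: the function names, the threshold, and the sample readings are all hypothetical. It shows an edge node reducing a batch of raw sensor readings to a small summary and making a local decision, so that only the summary would need to travel to the cloud.

```python
from statistics import mean


def summarize_at_edge(readings, threshold):
    """Reduce a non-empty batch of raw readings to a compact summary.

    Hypothetical helper: keeps batch statistics plus only the readings
    above `threshold`, so far less data has to be uploaded.
    """
    alerts = [r for r in readings if r > threshold]
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "alerts": alerts,
    }


def needs_immediate_action(summary):
    """Decide locally, without waiting for a cloud round trip."""
    return len(summary["alerts"]) > 0


# Assumed sample data: five temperature-like readings, one of them abnormal.
readings = [20.0, 21.0, 19.0, 80.0, 20.0]
summary = summarize_at_edge(readings, threshold=50.0)

if needs_immediate_action(summary):
    print("act locally on:", summary["alerts"])

# Only `summary` would be forwarded to the cloud, not the raw readings.
print("upload:", summary)
```

In this sketch the time-critical decision is made on the edge node, while the cloud still receives aggregate data for retention and longer-term analysis, which matches the division of work described above.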

The advent of edge computing does not replace the need for traditional data centers or cloud computing infrastructure. Instead, it coexists with the cloud as the computing power of the cloud is distributed to endpoints.

Machine Learning at the Network Edge

Machine learning (ML) is a complementary technology to edge computing. In machine learning, the generated data is fed to the ML system to produce an analytical decision model. In IoT and edge computing scenarios, machine learning can be implemented in two ways.

Edge computing and the Internet of Things

Edge computing, together with machine learning technology, lays the foundation for the agility of future communications for IoT. The upcoming 5G telecommunication network will provide a more advanced network for IoT use cases. In addition to high-speed and low-latency data transmission, 5G will also provide a telecommunication network based on mobile edge computing (MEC), enabling automatic implementation and deployment of edge services and resources. In this revolution, IoT device manufacturers and software application developers will be more eager to take advantage of edge computing and analytics. We will see more intelligent IoT use cases and an increase in intelligent edge devices.

Original link: http://www.futuriom.com/articles/news/what-is-edge-computing-for-iot/2018/08

Recommend

China has 600,000 5G base stations. Why should 5G investment be moderately ahead of schedule?

In the popular movie "My Hometown and Me&quo...

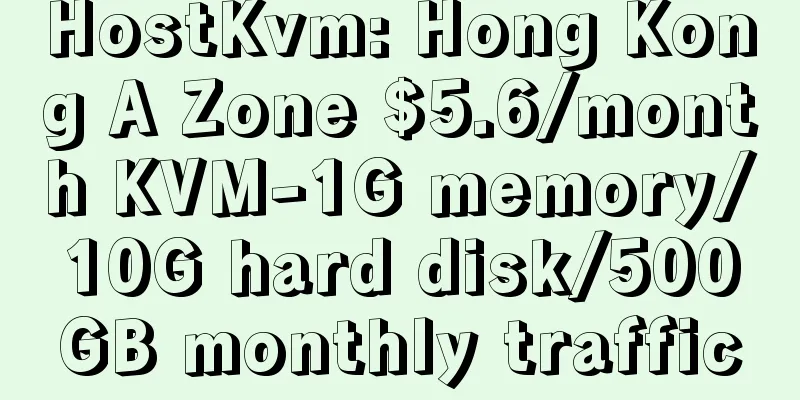

Maxthon Hosting: Los Angeles CN2 GIA/Hong Kong CN2 Line VPS Monthly Payment Starting from 54 Yuan, Dual Core/2G Memory/30G SSD/100M Bandwidth

Aoyo Hosting has not launched a new promotion for...

JustVPS: 30% off UK VPS/20% off all VPS, unlimited traffic in multiple data centers in the United States/France/Singapore/Russia/Hong Kong, China

JustVPS.pro bought a VPS in London, UK, last Dece...

Before the 5G feast, the operators' tight restrictions have been booked

At the 2019 Mobile World Congress held in Barcelo...

Flash is going to be discontinued, but new technologies in the 5G era will leave you no time to recall the past

I saw a piece of information that Adobe said it w...

How to Evaluate DCIM Tools for the Modern Data Center

There are almost too many data center infrastruct...

BriskServers: $7.8/mo-AMD Ryzen9 7950x/4GB/80GB/Unlimited data @ 10Gbps/Ashburn

BriskServers was founded in 2021 by a group of ga...

Beat 5G! German team achieves the fastest wireless transmission to date, 14G data per second

Although 5G has not yet become popular, scientist...

Liu Guangyi: Spectrum unification promotes early commercial use of 5G and smooth evolution of 4G networks to 5G

5G has become the focus of major exhibitions, but...

Justg: South Africa cn2 gia line (three networks) VPS annual payment starting at $39.99, 500M bandwidth, KVM architecture

Justg is a relatively new foreign VPS service pro...

Have you used 5G? Wang Jianzhou: 6G network will be commercially available in 2030

According to Sina Technology, at the 2021 Technol...

Three-layer network model of Internet products

If you ask what is the biggest feature of Interne...

Four Key Words for Successful Large-Scale Deployment of IPv6

【51CTO.com original article】In 2019, IPv6 transfo...

Enabling sustainable development in smart cities through Wi-Fi

What is the definition of a smart city? The answe...

iWebFusion: Starting from $45/month E3-1230v2/16GB/2TB/10TB@1Gbps, multiple data centers in Los Angeles and other places

iWebFusion (iWFHosting) is a site of H4Y, a forei...

![[Black Friday] spinservers: $270 off San Jose high-end servers, dual E5-2683 v4, 512G memory, 2*3.83T SSD, 10Gbps bandwidth](/upload/images/67cac499cea2f.webp)